The AI Killer: Why Dirty Data Will Bankrupt Your Agent

(Part 4 of the series: “The ERP Intelligence Evolution: From Data to Agents”)

In Part 1, we defined the terms.

In Part 2, we queried the data.

In Part 3, we explored the dangers of autonomous Agents.

Now, we must face the brutal reality that kills up to 85% of AI projects before they even start.

It’s not the algorithm. It’s not the GPU power. It’s your Master Data.

We all want the Self-Driving Enterprise, but ask yourself: would you engage the autopilot in a car if the sensors were covered in mud and the GPS map was from 1990?

That is exactly what we are doing when we unleash GenAI on a legacy ERP database full of duplicates, missing lead times, and items described as “DO NOT USE.”

The “Garbage In” Multiplier Effect

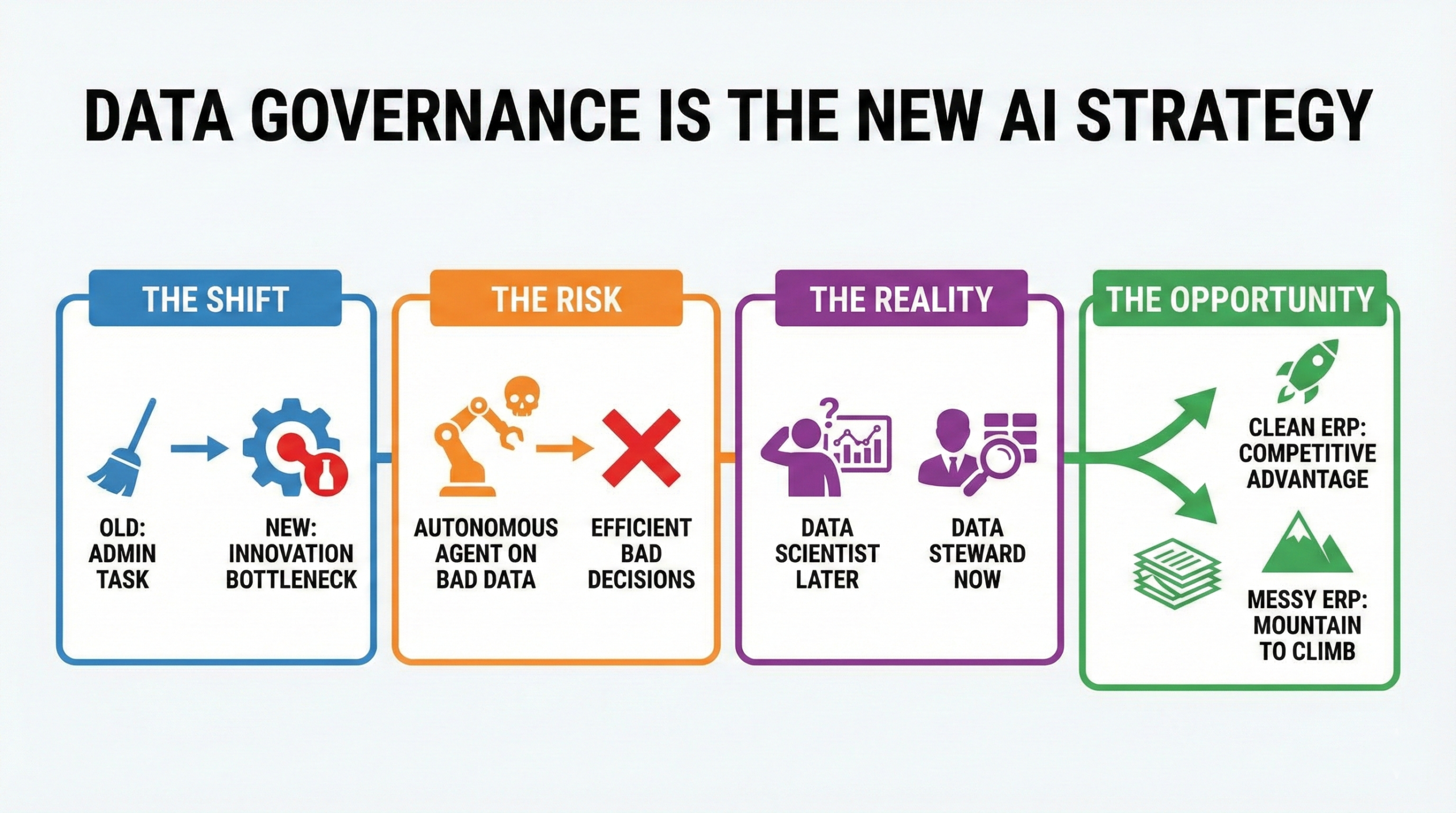

In the traditional ERP world, Garbage In, Garbage Out was a nuisance.

- Scenario: the lead time in the system is 0 days, but the real lead time is 30 days.

- Old Outcome: MRP suggests ordering too late. The planner looks at it, laughs, ignores the system, and orders manually based on tribal knowledge.

- The Safety Net: the human was the error-correction layer.

In the AI world, that safety net is gone. As McKinsey points out in its analysis of AI in supply chains, statistical models produce “unreliable results” when the underlying data is flawed, potentially costing organizations 8-12% in lost revenue.

- Scenario: the lead time is blank.

- New Outcome: the Supply Chain Agent reads the blank as 0 days.

It waits until the last minute. It fails to secure materials. The production line stops. Or worse, it hallucinates a lead time based on a generic industry average that doesn’t apply to your specific, niche alloy.
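To make the blank-field failure concrete, here is a minimal Python sketch of MRP-style back-scheduling (order date = need date minus lead time). The function names and the `MissingMasterData` exception are hypothetical and are not part of Infor LN / M3 or any agent framework. In the naive version, a blank field collapses to 0 days. The guarded version refuses to plan and escalates instead.

```python
from datetime import date, timedelta
from typing import Optional


class MissingMasterData(Exception):
    """Raised when a field the agent depends on is blank or implausible."""


def naive_order_date(need_by: date, lead_time_days: Optional[int]) -> date:
    # A careless integration: a blank (None) lead time silently becomes 0 days,
    # so the order date equals the date the material is needed.
    return need_by - timedelta(days=lead_time_days or 0)


def guarded_order_date(need_by: date, lead_time_days: Optional[int]) -> date:
    # Refuse to plan on missing data; a human (or a data-quality workflow) must fix it.
    if lead_time_days is None:
        raise MissingMasterData("lead time is blank: route to a planner")
    # Assumption for this sketch: a purchased item never arrives in 0 days.
    if lead_time_days <= 0:
        raise MissingMasterData(f"implausible lead time: {lead_time_days} days")
    return need_by - timedelta(days=lead_time_days)


if __name__ == "__main__":
    need_by = date(2026, 3, 2)
    print(naive_order_date(need_by, None))   # 2026-03-02
    print(guarded_order_date(need_by, 30))   # 2026-01-31
    try:
        guarded_order_date(need_by, None)
    except MissingMasterData as err:
        print(f"escalated: {err}")
```

With a need date of March 2, 2026, the naive path places the order on the day the material is needed. A 30-day lead time moves the order back to January 31. Treating 0 as suspect is an assumption that suits purchased items; items that are legitimately available the same day would need a different rule.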

Rule #1 of ERP AI: Artificial Intelligence assumes your data is truth.

It lacks the common sense to know that “Item A” and “Item A-OLD” are the same thing.

The Tribal Knowledge Gap

The biggest challenge for AI in ERP is that the real operating model often lives in people’s heads, not in the database tables.

- Explicit Data: what is stored in Infor LN / M3 (PO dates, quantities).

- Implicit Data: “Supplier X says 2 weeks, but they always take 4” or “Never ship sensitive electronics on Friday.”

An LLM (Large Language Model) cannot read minds.

If you want an Agent to manage procurement, you must convert Implicit Knowledge into Explicit Data.

This means fields that were previously “nice to have” (like Vendor Performance Ratings, precise Lead Times, Safety Stock logic) are now mandatory.
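One way to begin converting implicit knowledge into explicit data is to measure it. The Python sketch below assumes you can export purchase-order lines with their order dates and actual receipt dates. It computes a supplier’s observed lead time (the median of actual order-to-receipt days) and compares it with the quoted lead time. It also stores the Friday rule as a structured field so the rule no longer lives only in someone’s memory. The `Receipt` record, the field names, and the choice of the median are illustrative assumptions, not an Infor schema.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import FrozenSet, List, Optional


@dataclass
class Receipt:
    # Hypothetical PO-line export: one row per received order line.
    supplier: str
    ordered: date
    received: date


def observed_lead_time(receipts: List[Receipt], supplier: str) -> Optional[int]:
    """Median actual order-to-receipt time in days, or None without history."""
    days = [(r.received - r.ordered).days for r in receipts if r.supplier == supplier]
    return round(median(days)) if days else None


def lead_time_gap(quoted_days: int, receipts: List[Receipt], supplier: str) -> Optional[int]:
    # Positive result: the supplier is slower than it claims.
    observed = observed_lead_time(receipts, supplier)
    return None if observed is None else observed - quoted_days


# "Never ship sensitive electronics on Friday" as data, not tribal knowledge.
# date.weekday() numbers Monday as 0, so Friday is 4.
NO_SHIP_WEEKDAYS: FrozenSet[int] = frozenset({4})


def can_ship(day: date, blocked: FrozenSet[int] = NO_SHIP_WEEKDAYS) -> bool:
    return day.weekday() not in blocked


if __name__ == "__main__":
    history = [
        Receipt("Supplier X", date(2026, 1, 5), date(2026, 2, 2)),   # 28 days
        Receipt("Supplier X", date(2026, 2, 2), date(2026, 3, 4)),   # 30 days
        Receipt("Supplier X", date(2026, 3, 2), date(2026, 3, 28)),  # 26 days
    ]
    print(observed_lead_time(history, "Supplier X"))  # 28
    print(lead_time_gap(14, history, "Supplier X"))   # 14
    print(can_ship(date(2026, 1, 9)))                 # False (a Friday)
```

In this sample, the supplier quotes 2 weeks but delivers in about 4. The agent can then plan on the 28 observed days rather than the 14 promised ones.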

Vectorization: the Band-Aid for Unstructured Data

There is a silver lining. Traditional ERPs struggled with unstructured data (PDF specs, email chains, comment fields).

Generative AI loves unstructured data.

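Through a process called vectorization (embedding), PDF manuals and technical specs are converted into numeric vectors and stored in a Vector Database, where they can be searched by meaning rather than by exact keywords.

This allows an Agent to answer “Do we have a motor compatible with these voltage specs?” by retrieving and reading the relevant passages from the attached PDF files.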

However, this creates a new governance nightmare: If you have outdated PDFs attached to your Item Master, the AI will confidently recommend obsolete parts. The cleanliness of your documents is now just as important as the cleanliness of your rows and columns.
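The governance point can be shown in a few lines. The Python sketch below uses a deliberately crude stand-in for an embedding model: word-count vectors compared by cosine similarity. A real deployment would use a learned embedding model and a vector database, neither of which is shown here. The `status` field is a hypothetical lifecycle flag copied from the Item Master. Without that flag, the obsolete motor’s spec sheet wins the search. With it, only active parts are eligible.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass
from typing import List


@dataclass
class SpecDoc:
    item: str
    status: str  # hypothetical lifecycle flag from the Item Master: "ACTIVE" or "OBSOLETE"
    text: str    # text extracted from the attached PDF


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: a bag-of-words term-count vector.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(query: str, docs: List[SpecDoc], active_only: bool = True) -> List[SpecDoc]:
    # Governance filter first, similarity ranking second.
    pool = [d for d in docs if d.status == "ACTIVE"] if active_only else docs
    q = embed(query)
    return sorted(pool, key=lambda d: cosine(q, embed(d.text)), reverse=True)


if __name__ == "__main__":
    docs = [
        SpecDoc("MTR-100", "OBSOLETE", "Motor 400V 3 phase 5kW"),
        SpecDoc("MTR-200", "ACTIVE", "Motor 400V 3 phase 5kW IE3 efficiency"),
    ]
    query = "motor 400V 5kW"
    print([d.item for d in search(query, docs, active_only=False)])  # ['MTR-100', 'MTR-200']
    print([d.item for d in search(query, docs)])                     # ['MTR-200']
```

The shorter, older spec sheet scores higher on pure similarity. That is exactly why the lifecycle flag has to be clean before the documents are indexed.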

The Roadmap to AI Readiness: Clean Up or Give Up

Before you buy an AI license, you need a Data Strategy. According to MIT Sloan Management Review, only 24% of companies currently describe themselves as “data-driven,” highlighting the massive gap between ambition and reality.

- De-duplicate Master Data

Agents cannot optimize inventory if the same bearing exists as three different item codes.

To fix this, you must aggressively consolidate Item Master and Business Partner records to ensure the AI sees a “single version of the truth.”

- Enrich the Meta-Data

AI needs context to function effectively.

A code like “Item 10202” means nothing to an LLM without rich descriptors.

To enable intelligence, you must ensure descriptions are standardized, attributes are fully populated, and technical classifications are applied consistently across the board.

- Kill the “Free Text” Addiction

Stop putting critical instructions in text notes or unstructured comments.

Business logic must be moved into structured fields where the ERP logic, and the Agent, can verify and act on it reliably, rather than guessing the intent behind a free-text note.
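The three steps above can be turned into a first-pass readiness audit. The Python sketch below assumes a simple export of Item Master rows containing code, description, attributes, and free-text notes. The `REQUIRED` attribute list, the normalization rules, and the free-text keywords are hypothetical policies you would replace with your own. The audit flags duplicate candidates, incomplete records, and business logic hiding in notes. It is a triage tool for human review, not an automatic merge.

```python
import re
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Item:
    code: str
    description: str
    attributes: Dict[str, object] = field(default_factory=dict)
    notes: str = ""


# Hypothetical policy: attributes an agent needs before it may act on an item.
REQUIRED = ("lead_time_days", "uom", "commodity_class")
# Hypothetical markers of business logic buried in free text.
FREE_TEXT_FLAGS = re.compile(r"do not use|never ship|obsolete", re.IGNORECASE)


def normalize(description: str) -> str:
    # Drop legacy suffixes like "-OLD", then ignore case and punctuation,
    # so "Bearing 6204-2RS OLD" and "bearing 6204 2rs" compare equal.
    text = re.sub(r"[-_ ]?\b(?:old|obsolete)\b", "", description.lower())
    return " ".join(re.findall(r"[a-z0-9]+", text))


def audit(items: List[Item]) -> dict:
    groups: Dict[str, List[str]] = defaultdict(list)
    for it in items:
        groups[normalize(it.description)].append(it.code)
    missing = {
        it.code: [a for a in REQUIRED if it.attributes.get(a) in (None, "")]
        for it in items
    }
    return {
        # Same normalized description under more than one code.
        "duplicate_candidates": [codes for codes in groups.values() if len(codes) > 1],
        # Records the agent cannot safely use yet.
        "incomplete": {code: attrs for code, attrs in missing.items() if attrs},
        # Instructions that should move into structured fields.
        "free_text_logic": [it.code for it in items if FREE_TEXT_FLAGS.search(it.notes)],
    }


if __name__ == "__main__":
    full = {"lead_time_days": 30, "uom": "EA", "commodity_class": "BRG"}
    items = [
        Item("10202", "Bearing 6204-2RS", dict(full)),
        Item("10202-OLD", "Bearing 6204-2RS OLD", {"uom": "EA"}, notes="DO NOT USE"),
        Item("B-6204", "bearing 6204 2rs", dict(full)),
    ]
    print(audit(items))
```

On this sample, the three bearing codes collapse into one duplicate group, which is the “same bearing exists as three different item codes” case. `10202-OLD` is flagged twice: once as incomplete and once for carrying a “DO NOT USE” note in free text.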

The evolution from Data to Intelligence to Agents is inevitable. The tools are here and the vision is clear. But the fuel for this engine is credibility. And credibility comes from one place: the accuracy of the record.

Start cleaning.

Key Sources & Further Reading

- Gartner: Why 85% of AI Projects Fail – Predicts high failure rates due to erroneous data, bias, or management issues.

- Harvard Business Review: Data Readiness for the AI Revolution – 91% of leaders say a reliable data foundation is essential, but only 55% trust their current data.

- MIT Sloan: Making the most of AI – Identifies culture and data literacy as the primary obstacles to AI success.

Next Up: we have the technology, and we (hopefully) have the clean data. But what happens to people? In the final part of this series, we explore the Human Evolution: why the ERP Expert of tomorrow will stop doing data entry and start doing Algorithm Auditing.

Written by Andrea Guaccio

January 7, 2026