AI Agents vs SaaS Business Model: Why the Saaspocalypse is Wrong

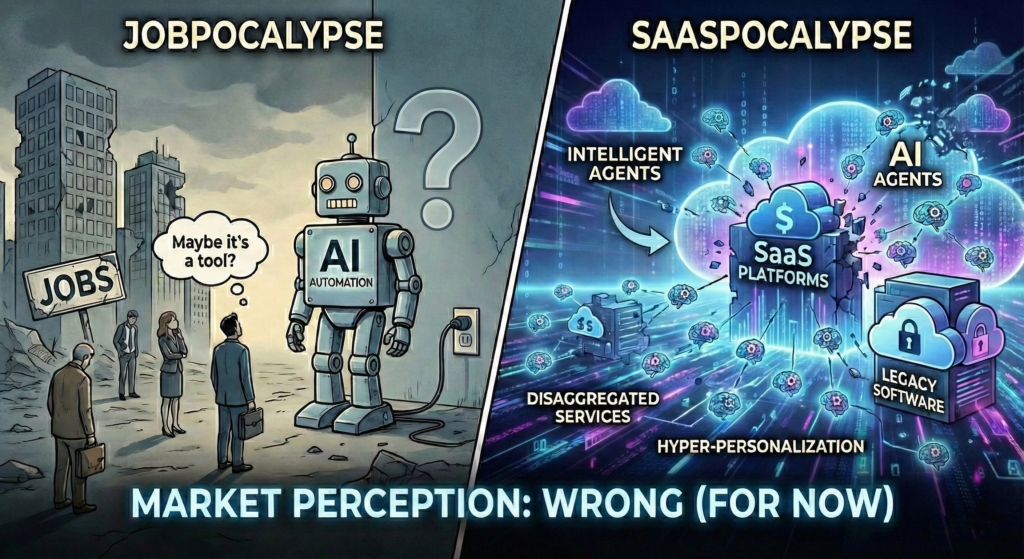

If you have been watching the markets over the past week, you have likely witnessed the carnage. Major SaaS (Software as a Service) players such as Salesforce and ServiceNow, along with large IT consultancies, have taken a massive hit. The narrative driving this sell-off is catchy, terrifying, and seemingly inevitable: the “Saaspocalypse” is finally here.

The catalyst for this panic is the rapid evolution of “agentic” capabilities in Artificial Intelligence. Specifically, the launch of Anthropic’s latest Cowork features, which introduce agents capable of working alongside humans in shared environments, has investors terrified.

The logic seems sound on the surface: If an AI agent can navigate the complex menus of SAP, Infor LN, or Salesforce better, faster, and cheaper than a human, why would any company continue to pay for expensive per-seat licenses?

Why hire a junior consultant to click buttons and configure tables when an agent can do it for a fraction of a cent per token?

The market is pricing in a future where software revenue collapses because AI cannibalizes the user base. But as someone who has spent over a decade implementing ERP systems such as Infor LN in complex multinational environments, I see a massive, dangerous gap between financial panic and technical reality.

The Economic Fear vs. The Technical Reality

To understand why the market is wrong, we first need to understand what it is afraid of. The SaaS business model is predicated on seats: the vendor charges a monthly fee for every human who needs access to the system.

If an AI agent can log in as a single “super-user” and perform the work of 50 customer service reps or 10 supply chain planners, the revenue model for these software giants evaporates.

However, this fear assumes one critical thing: that the AI agents actually work.

Not just “work” in a demo where they book a flight or summarize a PDF. I mean work in the messy, high-stakes environment of enterprise resource planning, where a single wrong click can cost millions.

While Wall Street was selling, the scientific community was issuing serious warnings.

The latest International Scientific Report on the Safety of Advanced AI, released just days ago by the UK government’s AI Safety Institute, draws a stark conclusion that every CTO should print out and tape to their wall:

“Reliable automation of long or complex tasks remains infeasible.” (Source: International Scientific Report on the Safety of Advanced AI)

This is the “dirty little secret” of the current AI hype cycle. An agent that works 90% of the time is a miracle for creative tasks. If you ask ChatGPT to write a poem and it messes up a rhyme, nobody dies. But in my world of ERP implementation and mission-critical business processes, 90% accuracy is a disaster.
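To see why 90% is a disaster, here is a rough sketch under two simplifying assumptions of my own, neither of which comes from the report: a business process is a chain of independent steps, and the agent gets each step right with the same probability. Real steps are correlated and vary in difficulty, so treat this as an illustration of the compounding effect, not a measurement.

```python
def chain_success_probability(per_step_accuracy: float, steps: int) -> float:
    """Probability that every step in a chain succeeds.

    Assumes steps are independent and equally reliable (a simplification).
    """
    if not 0.0 <= per_step_accuracy <= 1.0:
        raise ValueError("per_step_accuracy must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return per_step_accuracy ** steps


if __name__ == "__main__":
    # Hypothetical chain lengths, chosen only to show the trend.
    for steps in (1, 5, 10, 20, 50):
        p = chain_success_probability(0.90, steps)
        print(f"{steps:>2} steps at 90% each: {p:.1%} chance of a clean run")
```

Under these assumptions, a ten-step process with 90% per-step accuracy completes cleanly only about 35% of the time, which is why a figure that sounds impressive for a single task is unacceptable for a multi-step ERP transaction.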

The Governance Gap: A Race Without Brakes

Beyond technical reliability, the report highlights a structural risk that the market’s superficial analysis completely ignores. It concludes that there is a widening gap between the pace of AI advancement and society’s capacity to create effective safety and governance measures.

In the corporate world, this gap is fatal. ERP systems are the backbone of compliance, taxation, and financial reporting. You cannot deploy “move fast and break things” technology into systems designed for stability and auditability. The hype assumes we can unleash autonomous agents today; the reality is that we lack the governance frameworks to even monitor what they are doing, let alone control them.

The Hidden Risk: The Death of the Apprenticeship Model

There is a darker, more structural reason why consultancy firms specifically are seeing their stock punished. The issue extends far beyond software licenses to the destruction of the talent pipeline.

For decades, the consultancy business model has relied on a “pyramid”: armies of junior consultants do the low-level configuration and data entry work (billed to the client) while learning the trade. They “shadow” the seniors to understand the complex logic of business processes.

If we replace these juniors with AI agents to save money today, we create a knowledge vacuum tomorrow.

A recent analysis on the crisis of the junior developer highlights a terrifying question: If no one does the grunt work, how does anyone become a senior?

You cannot learn to manage a complex supply chain crisis in Infor LN if you haven’t spent years understanding the basic transactions that caused it.

The market is rightly scared that by automating entry-level work, we are cutting off the supply of future experts, leaving companies with fast AI agents but no humans capable of judging whether the agent is right or wrong.

The Build-It-Yourself Fallacy

Another dangerous myth fueling this market volatility is the idea that tools like Project Genie or Claude will allow every company to simply “build their own ERP” in-house, bypassing expensive SaaS vendors entirely.

This view fundamentally misunderstands what software is.

Writing code is not the same as building a system.

Think of the video game industry. Generative AI can create 3D models and write scripts, but that doesn’t mean a teenager in their bedroom can generate Elden Ring or Grand Theft Auto overnight. A masterpiece game requires level design, narrative pacing, physics engines, and optimization. It is a complex structure in which code is just one brick.

The same applies to ERPs and CRMs.

An ERP isn’t just a collection of Python scripts; it is a crystallized structure of international tax laws, supply chain compliance, security protocols, and accounting best practices. Thinking a company will throw away SAP or Salesforce to build a “home-made” AI CRM is madness. It underestimates the complexity of the processes embedded in these tools.

Recent reports from SupplyChainBrain (Feb 2026) confirm this: leaders are already realizing that maintaining custom AI agents is a nightmare and are pivoting back to established vendors.

Furthermore, we must not forget human inertia. I have spent a large part of my career migrating companies from on-premise to cloud. It is a transition that technically should take months, yet industry-wide, it has taken decades and is still ongoing. Why? Because humans and organizations change slowly. The fantasy that corporate habits will pivot overnight just because a new AI model was released ignores the reality of how businesses actually operate.

The Agent Delusion

This leads us to a critical misunderstanding in the industry. We often blame the AI for these failures, but the problem lies deeper.

As I explored in my recent article, The Agent Delusion: Why Your ERP Isn’t Ready for Autopilot (Yet), there is a brutal reality that kills up to 85% of AI projects before they even start.

The delusion is thinking you can layer a 21st-century autonomous intelligence on top of 20th-century static, dirty data structures and expect magic. You cannot build a Ferrari engine (the AI Agent) and put it inside a rusted chassis (your legacy ERP data) and expect to win the race. The agent doesn’t just need to be smart; the environment it operates in must be ready for intelligence. Most ERPs simply aren’t.

The Era of Agentic Engineering

So, does this mean the Saaspocalypse is a myth? No. It means the timeline and the nature of the change are misunderstood. We are not heading for a world where software runs itself tomorrow.

We are heading for a world where the role of the human shifts dramatically.

Andrej Karpathy, one of the leading minds in AI (formerly of OpenAI and Tesla), recently noted that the era of Vibe Coding, where you just ask an AI to write code and hope for the best, is over.

We are entering the era of Agentic Engineering.

For professionals like us, including consultants, developers, and system architects, the job isn’t disappearing; it is becoming significantly harder.

We are becoming the architects and supervisors of AI agents.

“Agentic Engineering” means building the scaffold that keeps these agents safe. It involves:

- Evaluation Frameworks: Rigorous test suites to ensure the agent doesn’t hallucinate inventory numbers.

- Guardrails: Hard-coded limits (e.g., “The AI cannot approve a Purchase Order over €10,000 without human sign-off”).

- Human-in-the-Loop Workflows: Designing processes where the AI does the heavy lifting of data entry, but a human expert (the “pilot”) validates the critical decision points.
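The guardrail and human-in-the-loop ideas above can be sketched in a few lines. This is a minimal illustration, not a real ERP integration: the names `PurchaseOrder`, `Decision`, and `review_purchase_order` are hypothetical, and the only figure taken from the text is the 10,000 euro limit.

```python
from dataclasses import dataclass
from decimal import Decimal
from enum import Enum
from typing import Optional

# Limit from the example in the text; in practice this would be configurable.
APPROVAL_LIMIT_EUR = Decimal("10000")


class Decision(Enum):
    AUTO_APPROVED = "auto_approved"
    NEEDS_HUMAN_SIGN_OFF = "needs_human_sign_off"
    APPROVED_BY_HUMAN = "approved_by_human"


@dataclass(frozen=True)
class PurchaseOrder:
    order_id: str
    amount_eur: Decimal


def review_purchase_order(
    po: PurchaseOrder, human_approver: Optional[str] = None
) -> Decision:
    """Apply a hard-coded limit before an agent may approve an order."""
    if po.amount_eur <= 0:
        raise ValueError("amount_eur must be positive")
    # At or below the limit, the agent may approve on its own.
    if po.amount_eur <= APPROVAL_LIMIT_EUR:
        return Decision.AUTO_APPROVED
    # Above the limit, approval only counts if a named human signed off.
    if human_approver:
        return Decision.APPROVED_BY_HUMAN
    return Decision.NEEDS_HUMAN_SIGN_OFF


if __name__ == "__main__":
    big_order = PurchaseOrder("PO-001", Decimal("25000"))
    print(review_purchase_order(big_order))                  # escalated
    print(review_purchase_order(big_order, "senior.buyer"))  # human approved
```

The point of the design is that the limit lives in ordinary code outside the model, so no prompt or hallucination can talk the agent past it.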

The Shift from “Seats” to “Outcomes”

The business model will change. The per-seat model is indeed threatened, but not because no one will use the software. It is threatened because the value metric is shifting.

We are already seeing the first concrete signs of this pivot. Salesforce has begun adapting to this reality with the launch of Agentforce, introducing a pricing model based on $2 per conversation rather than just user seats. They realized that selling login licenses for autonomous bots makes no sense; they must sell the outcome of the work.

This aligns with what Bessemer Venture Partners defines as the rise of “Service-as-a-Software.” In this new paradigm, vendors don’t just sell you a tool (a CRM or ERP); they sell you the digital labor itself.

In the future, it is probable that Infor or SAP won’t just charge you for how many people log in. They will likely charge you for outcomes:

- “Cost per invoice processed.”

- “Cost per successful customer support resolution.”

- “Cost per optimized supply chain plan.”

This shift is actually beneficial for the providers if they can crack the reliability code. But until they do, the reliance on human expertise remains absolute.
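To make the seats-versus-outcomes comparison concrete, here is a small back-of-the-envelope sketch. The $2 per conversation figure is the one quoted above; the seat count and the per-seat price in the usage example are hypothetical numbers of mine, chosen only to show the arithmetic.

```python
from decimal import ROUND_CEILING, Decimal

PRICE_PER_CONVERSATION = Decimal("2")  # figure quoted in the text


def seat_revenue(seats: int, price_per_seat: Decimal) -> Decimal:
    """Monthly revenue under a per-seat license."""
    return seats * price_per_seat


def outcome_revenue(
    conversations: int, price_per_conversation: Decimal = PRICE_PER_CONVERSATION
) -> Decimal:
    """Monthly revenue under per-conversation pricing."""
    return conversations * price_per_conversation


def break_even_conversations(
    seats: int,
    price_per_seat: Decimal,
    price_per_conversation: Decimal = PRICE_PER_CONVERSATION,
) -> int:
    """Smallest monthly conversation count that matches the seat revenue."""
    target = seat_revenue(seats, price_per_seat)
    ratio = target / price_per_conversation
    return int(ratio.to_integral_value(rounding=ROUND_CEILING))


if __name__ == "__main__":
    # Hypothetical: 50 seats at 150 per seat per month.
    seats, price = 50, Decimal("150")
    needed = break_even_conversations(seats, price)
    print(f"Seat revenue: {seat_revenue(seats, price)} per month")
    print(f"Conversations needed to match it: {needed}")
```

With those hypothetical inputs the vendor needs 3,750 agent conversations a month to replace the revenue from 50 seats, which shows why the move to outcomes only pays off for providers if agents are reliable enough to handle real volume.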

Why Experience Matters More Than Ever

There is a fear that AI levels the playing field, making a junior consultant with ChatGPT equal to a senior expert. I argue the opposite.

When you use a tool that lies confidently, you need more expertise to catch the lie, not less.

A junior consultant might accept the AI’s suggestion to change a General Ledger mapping because “it looks right.” A senior consultant knows that changing that mapping will break the year-end fiscal report because of a local tax regulation the AI’s training data (mostly US-centric) knows nothing about.

While the Jobpocalypse narrative assumes that our value lies in typing speed or memory, our true value lies in judgment.

My Bottom Line

The market is pricing companies based on the potential of AI while ignoring the engineering reality of implementation.

Real engineering deals with edge cases. Real engineering deals with dirty data, legacy integrations, and contradictory business rules. Until AI agents can prove reliability at scale without constant human hand-holding, which the scientific community says is still far off, the SaaS model is under pressure to evolve, not facing extinction.

The future lies in Humans managing Agents, rather than a battle of AI vs. Humans.

Written by Andrea Guaccio

February 09 2026