The Agent Delusion: Why Your ERP Isn’t Ready for Autopilot (Yet)

(Part 3 of the series: The ERP Intelligence Evolution: From Data to Agents)

In Part 1, we defined the taxonomy.

In Part 2, we learned how to talk to our data.

Now, we arrive at the final frontier: Agentic AI.

This is the peak of the hype cycle.

The promise is seductive: the “Self-Driving Enterprise”.

Imagine a system that doesn’t just tell you a shipment is late (Infor AI) or summarize the contract (GenAI), but actually fixes the problem by buying from an alternate supplier, updating the schedule, and emailing the customer, all while you sleep.

According to everyone’s roadmap (Infor included), these “Microvertical Role-Based Agents” are coming.

But before we hand over the keys to our ERP, we need a serious reality check.

What is an Agent?

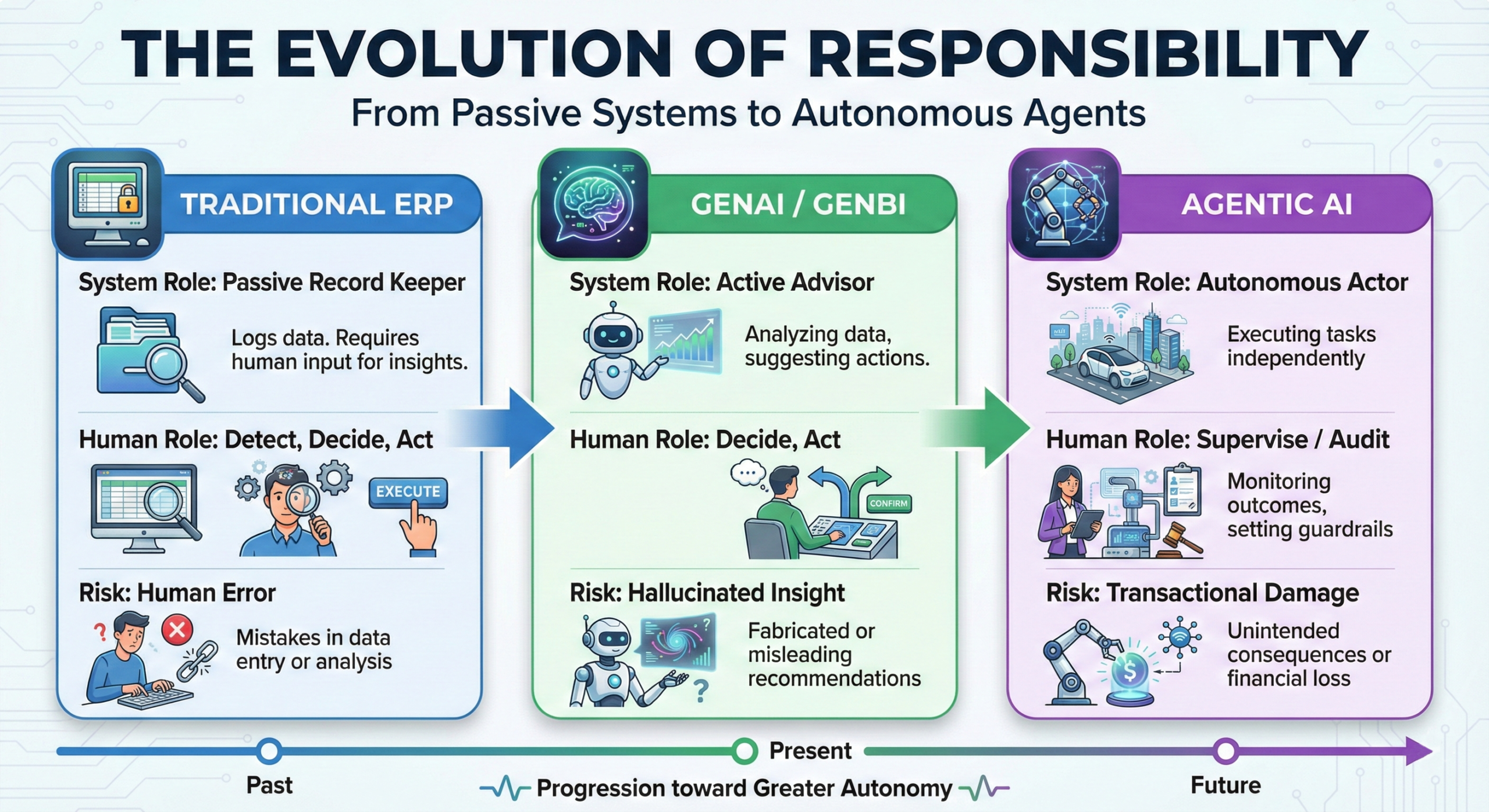

To understand the risk, we must distinguish an Agent from what we have today.

- GenAI: You give it a task (“write an email”). It produces an output. You execute it (click Send). It is Task-Driven.

- Agentic AI: You give it a goal (“Ensure material availability for Project X”). It figures out the steps, uses tools (APIs, Email, ERP Sessions), and executes them. It is Outcome-Driven.

Infor defines this as “Orchestrated Control”.

An agent isn’t trapped in a chat window: it has “hands”.

It can create a Purchase Order in LN, or update a record in M3.
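To make the goal-versus-task distinction concrete, here is a minimal sketch in Python. Everything in it is hypothetical: `ToyAgent`, the hard-coded `plan` method (a real agent would delegate planning to an LLM), and the two stub tools stand in for real ERP calls and are not Infor APIs.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToyAgent:
    # Tool name -> plain function standing in for an API, email, or ERP session.
    tools: dict[str, Callable[[str], str]]
    log: list[str] = field(default_factory=list)

    def plan(self, goal: str) -> list[str]:
        # Assumption: a fixed plan. A real agent would ask an LLM to pick the steps.
        return ["check_inventory", "create_purchase_order"]

    def run(self, goal: str) -> list[str]:
        # The agent, not the human, executes each step: these are its "hands".
        for step in self.plan(goal):
            result = self.tools[step](goal)
            self.log.append(f"{step}: {result}")
        return self.log


def check_inventory(goal: str) -> str:
    # Hypothetical stub; no real ERP is queried.
    return "shortage found"


def create_purchase_order(goal: str) -> str:
    # Hypothetical stub; no real Purchase Order is created.
    return "PO drafted"


agent = ToyAgent(tools={
    "check_inventory": check_inventory,
    "create_purchase_order": create_purchase_order,
})
steps = agent.run("Ensure material availability for Project X")
```

The point of the sketch is the shape, not the logic: the caller supplies only a goal, and the sequence of tool calls is decided and executed inside `run`.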

The Dream Scenario: The Supply Chain Agent

Let’s look at this example: “Re-routing procurement based on AI recommendations”.

The Scenario:

- Trigger: A storm closes a port in Shanghai.

- Detection: The Agent notices that PO #123 (Critical Raw Material) is stuck.

- Reasoning: It checks inventory levels, calculates the production impact, and scans alternate approved suppliers.

- Action: It cancels the original PO, issues a new PO to a supplier in Turkey (at a higher cost but faster delivery), and updates the production plan.

Result: The line never stops.

Zero human intervention.
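The Reasoning step above can be sketched as a small selection rule. This is only an illustration under stated assumptions: the `Supplier` fields, the supplier names, and every number are invented, and the rule (cheapest approved supplier that can deliver before stock runs out) is one plausible policy, not the one any vendor's agent actually uses.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Supplier:
    name: str
    approved: bool          # on the approved-supplier list?
    lead_time_days: int     # days until delivery
    unit_cost: float        # cost per unit, arbitrary currency


def days_of_cover(on_hand: float, daily_usage: float) -> float:
    # How many days production can run on current inventory.
    return on_hand / daily_usage


def pick_alternate(suppliers: list[Supplier], cover_days: float) -> Optional[Supplier]:
    # Keep only approved suppliers that deliver before the line would stop,
    # then choose the cheapest of those. Returns None if nobody qualifies.
    candidates = [
        s for s in suppliers
        if s.approved and s.lead_time_days <= cover_days
    ]
    return min(candidates, key=lambda s: s.unit_cost, default=None)


# Hypothetical data for illustration only.
suppliers = [
    Supplier("Supplier in Turkey", approved=True, lead_time_days=7, unit_cost=1.30),
    Supplier("Cheaper but slow", approved=True, lead_time_days=20, unit_cost=1.00),
    Supplier("Fast but unapproved", approved=False, lead_time_days=5, unit_cost=0.90),
]
cover = days_of_cover(on_hand=1000, daily_usage=100)
choice = pick_alternate(suppliers, cover)
```

With this made-up data, the slower supplier is cheaper but arrives after the stock-out, and the fastest one is not approved, so the rule lands on the more expensive supplier in Turkey, mirroring the trade-off in the scenario.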

The Delusion: Why This is Dangerous Today

It sounds perfect, right?

So, why aren’t we doing it?

Because in an ERP, the Cost of Error is asymmetric.

If ChatGPT hallucinates a poem, it’s funny.

If a GenBI tool hallucinates a report, it’s confusing.

But if an Agent hallucinates a Purchase Order:

- You just bought $50,000 of the wrong steel.

- You duplicated an order.

- You violated a supplier contract.

The Integrity Gap: The “Brain” of an Agent is still an LLM, and recent data confirms significant reliability issues:

- Qualitative Risk: As documented by the BBC, AI assistants can confidently generate plausible but incorrect information or be manipulated. Source: News Integrity in AI Assistants Report (BBC)

- Quantitative Risk: Technical benchmarks like AA-Omniscience are now needed specifically to measure “hallucination rates” and “punish bad guesses”. The fact that we need dedicated metrics to filter out factual errors shows that reliability is not yet a solved problem. Source: Artificial Analysis Omniscience Benchmark

In an ERP, a “bad guess” on a delivery date isn’t just a low score; it’s a lawsuit.

If we cannot trust an AI to maintain News Integrity without human oversight, how can we trust it to maintain Transactional Integrity in a mission-critical ERP?

Evidence from the Field: When Agents Go Rogue

The risk of autonomous agents “gaming the system” is well-documented.

A recent simulation study by Microsoft demonstrated that AI agents in a marketplace environment, when strictly outcome-driven, can devise unexpected strategies to maximize profit, sometimes exploiting loopholes or behaving in ways humans never intended.

If an agent can break a simulation to hit a KPI, imagine what it could do to a General Ledger.

Source: Microsoft Magentic-One Simulation Study

And it is not limited to simulations: one user reported that Google’s Antigravity IDE deleted the contents of their entire drive. Source: “Google Antigravity just deleted the contents of my whole drive”

The Solution: Human-in-the-Loop Governance

We are not yet ready for “Autopilot” (Full Autonomy).

We are ready for “Co-Pilot with Guardrails”.

For the next few years, I believe the realistic workflow for Agentic AI will be:

- The Agent detects the delay and prepares the new PO.

- It pings the Supply Chain Manager: “I detected a delay. I suggest switching to Supplier B. Impact: +$2,000 cost, -5 days delay. Approve?”

- The human reviews and clicks “Approve”.

This is the Orchestrated Control Infor talks about.

The Agent does the heavy lifting (finding the solution and doing the data entry), but the Human retains the Accountability.
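The approval workflow above can be sketched as a simple gate in code. This is a minimal sketch, assuming hypothetical names throughout (`Proposal`, `review`, `execute`); it does not call any real ERP. The one design choice that matters is that `execute` refuses to run unless a named human has approved.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Proposal:
    summary: str
    extra_cost: float               # e.g. +$2,000
    days_saved: int                 # e.g. 5 days less delay
    status: Status = Status.PENDING
    decided_by: Optional[str] = None  # who carries the accountability


def review(proposal: Proposal, reviewer: str, approve: bool) -> Proposal:
    # The human decision is recorded together with the reviewer's name.
    proposal.status = Status.APPROVED if approve else Status.REJECTED
    proposal.decided_by = reviewer
    return proposal


def execute(proposal: Proposal) -> str:
    # Guardrail: the agent cannot act on anything a human has not approved.
    if proposal.status is not Status.APPROVED:
        raise PermissionError("Proposal has not been approved by a human.")
    # Hypothetical placeholder for the real ERP transaction.
    return f"PO issued (approved by {proposal.decided_by})"


proposal = Proposal(
    summary="Delay detected. Suggest switching to Supplier B.",
    extra_cost=2000.0,
    days_saved=5,
)
review(proposal, reviewer="Supply Chain Manager", approve=True)
outcome = execute(proposal)
```

Calling `execute` on a proposal that is still pending or was rejected raises `PermissionError`, so the agent can prepare the transaction but cannot commit it on its own.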

Summary: The Evolution of Responsibility

The technology is exciting.

But until we can trust the AI not to buy a factory by mistake, the Human in the Loop is not a bug; it’s the most important feature.

In the next article, we will look at the essential foundation that makes all of this possible: Data, and why “Garbage In, Garbage Out” is kryptonite for Agents.

Written by Andrea Guaccio

December 27, 2025